So, you want to experiment with the latest pen-testing tools, or see how new exploits affect a system? Obviously you don’t want to run these sorts of tests on your production network or systems, so a security lab is just the thing you need. This article offers my advice on how to build a lab for testing security software and vulnerabilities while keeping it separate from the production network. I’ll be giving a mile-high overview, so you will have to look into much of the subject matter yourself if you want a step-by-step solution. I’ll try to keep my recommendations as inexpensive as possible, mostly sticking to things that could be scrounged from around the office or out of a dumpster. The three InfoSec lab topologies I’ll cover are: Dumpster Diver’s Delight, VM Venture and Hybrid Haven.

Dumpster Diver’s Delight: Old hardware, new use

The key idea here is to use old hardware you have lying around to create a small LAN to test on. Most computer geeks have a graveyard of old boxes friends and family have given them, and businesses have older machines that are otherwise condemned to be hazardous materials waste. While what you will have to gather depends on your needs, I would recommend the following:

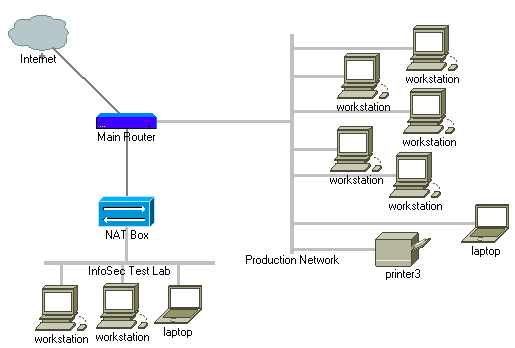

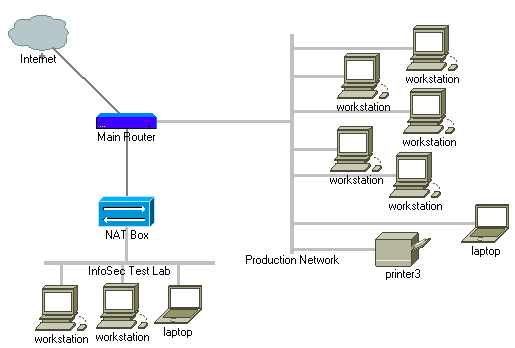

1. A NAT box: Any old cable/DSL router will work, or you can dual home a Windows or Linux box for the job and set up IP Masquerading. The reason you want a separate LAN behind a NAT box is so that things you do on the test network don’t spill over onto the production network, while you can still reach the Internet easily to download needed applications and updates. Also, since you will likely have unpatched boxes in your InfoSec lab so you can test out vulnerabilities, you don’t want them sitting on a hostile network and getting exploited by people other than you. You can punch holes into the test network by using the NAT router’s port forwarding options to map incoming connections to SSH, Remote Desktop or VPN services inside the InfoSec lab. This way you can sit outside the InfoSec LAN at your normal workstation on the production LAN, and just tunnel into the InfoSec lab to test things.

2. A bunch of computers/hosts: Whatever you want to test, be it computers, print servers or networking equipment. Boxes for a security lab do not have to be as up to snuff as production workstations. If you are doing mostly network related activities with the hosts, speed becomes less of an issue since you aren’t as annoyed by slow user interfaces.

3. A KVM (Keyboard/Video/Mouse) switch or plenty of monitors: Use what you have, but my recommendation is to get a KVM switch since it will take up less space and consume less power than having a monitor for each computer.
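For a dual-homed Linux box acting as the NAT box in item 1, the masquerading and port forwarding described there can be sketched roughly as follows. This is only an illustration that prints standard `iptables` commands rather than running them. The interface name `eth0`, the lab address `192.168.50.10`, the forwarded port `2222` and the helper `nat_rules` are all made-up placeholders, and a box with a restrictive FORWARD policy would need additional rules.

```python
def nat_rules(wan_if, forwards):
    """Build command strings for a simple Linux NAT box (illustrative only).

    wan_if:   interface facing the production LAN (hypothetical name).
    forwards: list of (wan_port, lab_ip, lab_port) tuples for TCP services
              inside the lab, e.g. SSH or Remote Desktop.
    """
    rules = [
        # Let the kernel route packets between the two interfaces.
        "sysctl -w net.ipv4.ip_forward=1",
        # IP masquerading: lab traffic leaves with the NAT box's own address.
        f"iptables -t nat -A POSTROUTING -o {wan_if} -j MASQUERADE",
    ]
    for wan_port, lab_ip, lab_port in forwards:
        # Port forward: a connection to wan_port on the NAT box is
        # redirected to a service on a host inside the lab.
        rules.append(
            f"iptables -t nat -A PREROUTING -i {wan_if} -p tcp "
            f"--dport {wan_port} -j DNAT --to-destination {lab_ip}:{lab_port}"
        )
    return rules


# Example: expose SSH on one lab host via port 2222 of the NAT box.
for rule in nat_rules("eth0", [(2222, "192.168.50.10", 22)]):
    print(rule)
```

With something like this in place, you would SSH to port 2222 on the NAT box from your production workstation and land on the lab host.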

The problem with the “Dumpster Diver’s Delight” approach is it takes up a lot of desk space. Also, if you are conscious of your monthly power bill you may not want to run a whole lot of boxes 24x7.

VM Venture: One big box, one little network

Why not have one powerful box instead of a bunch of old feeble ones? VMs (Virtual Machines) allow your one workstation to act as many boxes running different Operating Systems. I’ve mostly used products from VMware, but Microsoft Virtual PC, VirtualBox, QEMU or Parallels may be worth looking into depending on the platform you prefer. I personally recommend VMware Player and VMware Server, both of which are free:

http://www.vmware.com/products/player/

http://www.vmware.com/products/server/

VMware Server has more features (VM creation, remote management, revert state, etc.), but I’ve found it to run a little slower than VMware Player. The way VMware works is you have a .VMX file that describes the virtual machine’s hardware, and .VMDK file(s) that act as the VM’s hard drive. Setting up your own VMs is easy, and I have videos on my site about it:

http://irongeek.com/i.php?page=security/hackingillustrated

Also check out some of VMware’s pre-made VMs:

http://www.vmware.com/vmtn/appliances/

Using VMware has some huge advantages:

1. Did a tested exploit totally hose the box? Just revert the changes or restore the VM from a backup copy.

2. The VM is well isolated to the point that malware has a hard time getting out. Yes, there is research into malware detecting and busting out of VMs, but VMs still add an extra level of isolation.

3. It’s a great way to test out Live CDs/DVDs without taking the time to burn them.

4. VMware presents itself as pretty generic hardware, so installing an Operating System is fairly easy since you don’t have to play driver bingo like you would with some older hardware. That said, installing the VMware Tools add-on into your VMs will help make them far more functional.

5. You can configure a virtual network in one of three modes to allow you to have a virtual test network, all on one box:

Bridged: The VM acts as if it’s part of your real network. Useful if you follow the hybrid approach I’ll mention later.

NAT: Your VM is behind a virtual NAT router, protecting it from the outside LAN, but still allowing other VMs running on the same machine to contact it.

Host-Only: You would want to choose this option if you don’t want the VM to be able to bridge to the Internet using NAT. It would be a good idea to use this option if you are testing out any worm or viral code.
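The choice between the three modes can be summed up as a small decision function. This is a sketch, not anything VMware ships: `pick_network_mode` and `vmx_line` are hypothetical helpers, and the `ethernet0.connectionType` key reflects my understanding of how the setting is stored in a .VMX file, so check it against a file written by your own VMware version before relying on it.

```python
def pick_network_mode(joins_real_lan, needs_internet):
    """Pick a virtual network mode following the descriptions above.

    joins_real_lan: the VM should appear as a host on the physical LAN
                    (e.g. for a hybrid lab).
    needs_internet: the VM should be able to reach out through the host.
    """
    if joins_real_lan:
        return "bridged"
    if needs_internet:
        return "nat"
    # No route out at all: the safer choice for worm or viral code.
    return "hostonly"


def vmx_line(mode):
    """Format the mode as a .VMX setting (key name is an assumption)."""
    return f'ethernet0.connectionType = "{mode}"'


# Example: a VM used for testing a worm should not reach the Internet.
print(vmx_line(pick_network_mode(joins_real_lan=False, needs_internet=False)))
```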

Now you have an InfoSec test network on just one machine, making a much smaller desktop footprint and most likely consuming less power. The big thing to keep in mind when you plan to use VMs for your lab is memory. You want as much RAM as possible in your test machine so you can split it between the different VMs you will be running simultaneously. Depending on how you pare down the Operating Systems installed in your VMs, you will need different amounts of memory. I recommend dedicating the following amounts of RAM to each VM:

Linux, 128MB: Could be more or less depending on the desktop interface you use and what services you decide to run.

Windows 9x, 64MB: It should feel quite spry.

Windows 2000/2003/XP, 128MB: yes, you would want more if you can get it, but you can get away with 128MB if necessary.

Windows Vista, 256MB: Don’t send me hateful emails, it can be done. You have to set it to at least 512MB to install Vista, but thereafter you can shrink it down to only 256MB. It’s ugly, but it works.

So, let’s say you want to have Ubuntu Linux, Windows 2003 Server, XP and Vista all running at the same time as the guest Operating Systems, while Windows XP is used in the background as VMware’s host OS. That would be 128+128+128+256 = 640MB on top of whatever the host OS needs. Plan on getting at least 2GB of memory for your VM box if you can afford it.
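The same arithmetic is easy to redo for your own mix of guests. The sketch below uses the per-guest figures recommended above. The 512MB reserved for the host OS is purely an assumed placeholder, since how much the host really needs depends on your setup, and the function names are made up for illustration.

```python
# Per-guest RAM recommendations from the list above, in MB.
GUEST_RAM_MB = {
    "Linux": 128,
    "Windows 9x": 64,
    "Windows 2000/2003/XP": 128,
    "Windows Vista": 256,
}


def guest_ram_total(guests):
    """Sum the recommended RAM for all guests running at once."""
    return sum(GUEST_RAM_MB[g] for g in guests)


def fits(guests, installed_mb, host_reserve_mb=512):
    """Check the guests fit alongside the host OS (reserve is an assumption)."""
    return guest_ram_total(guests) + host_reserve_mb <= installed_mb


# The example above: Ubuntu, Windows 2003 Server, XP and Vista together.
lab = ["Linux", "Windows 2000/2003/XP", "Windows 2000/2003/XP", "Windows Vista"]
print(guest_ram_total(lab), "MB for guests")
print("Fits in 2GB:", fits(lab, installed_mb=2048))
```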

Also, as your VMs’ hard drives start to fill up, the .VMDK file will swell, so a large hard drive will be needed. The CPU is not as big an issue as you might think, but faster is always better, so go dual core if you can and look into getting a processor that supports AMD virtualization (AMD-V) or Intel VT (IVT).

http://en.wikipedia.org/wiki/X86_virtualization

Hybrid Haven: Best of both worlds

There’s no reason why you have to take just one of the above approaches. If the VMware host box is put on the same LAN as the rest of the test network, and the VMs are set to use the Bridged networking option, then you can use both approaches at the same time to create a diverse test network.

Conclusion

In this article I’ve covered how to use spare computers and VMs to create an InfoSec testing environment. I hope you have found this article useful. Most of my advice only helps if you are testing the security of workstation and server Operating Systems, services and applications. I’d love to hear from anyone with advice on learning about higher end routers and switches without having access to the real thing.